Meet the Gatekeepers of Students’ Private Lives

By Mark Keierleber | May 2, 2022

If you are in crisis, please call the National Suicide Prevention Lifeline at 1-800-273-TALK (8255), or contact the Crisis Text Line by texting TALK to 741741.

Megan Waskiewicz used to sit at the top of the bleachers, rest her back against the wall and hide her face behind the glow of a laptop monitor. While watching one of her five children play basketball on the court below, she knew she had to be careful.

The mother from Pittsburgh didn’t want other parents in the crowd to know she was also looking at child porn.

Waskiewicz worked as a content moderator for Gaggle, a surveillance company that monitors the online behaviors of some 5 million students across the U.S. on their school-issued Google and Microsoft accounts. Through an algorithm designed to flag references to sex, drugs, and violence and a team of content moderators like Waskiewicz, the company sifts through billions of students’ emails, chat messages and homework assignments each year. Their work is supposed to ferret out evidence of potential self-harm, threats or bullying, incidents that would prompt Gaggle to notify school leaders and, in some cases, the police.

As a result, kids’ deepest secrets — like nude selfies and suicide notes — regularly flashed onto Waskiewicz’s screen. Though she felt “a little bit like a voyeur,” she believed Gaggle helped protect kids. But mostly, the low pay, the fight for decent hours, inconsistent instructions and stiff performance quotas left her feeling burned out. Gaggle’s moderators face pressure to review 300 incidents per hour and Waskiewicz knew she could get fired on a moment’s notice if she failed to distinguish mundane chatter from potential safety threats in a matter of seconds. She lasted about a year.

“In all honesty I was sort of half-assing it,” Waskiewicz admitted in an interview with The 74. “It wasn’t enough money and you’re really stuck there staring at the computer reading and just click, click, click, click.”

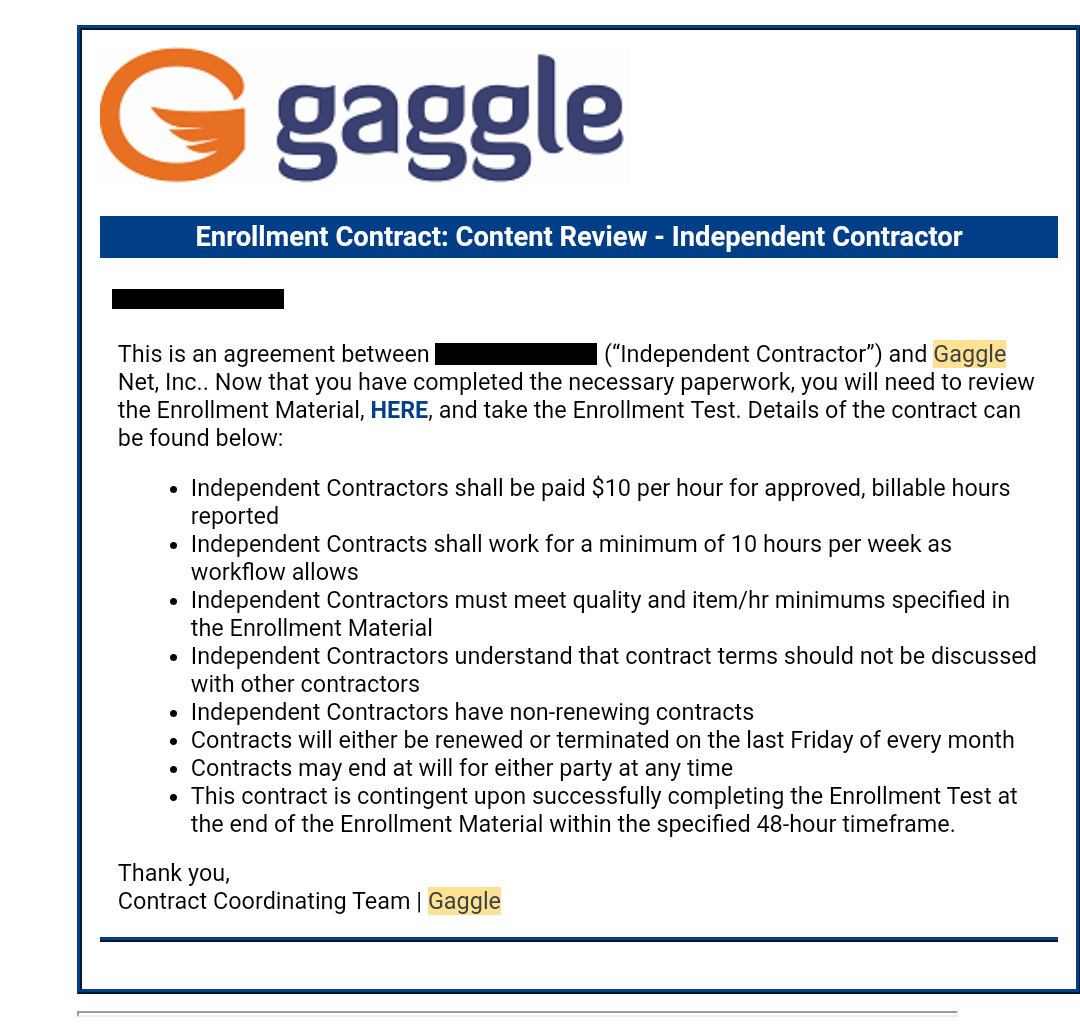

Content moderators like Waskiewicz, hundreds of whom are paid just $10 an hour on month-to-month contracts, are on the front lines of a company that claims it saved the lives of 1,400 students last school year and argues that the growing mental health crisis makes its presence in students’ private affairs essential. Gaggle founder and CEO Jeff Patterson has warned about “a tsunami of youth suicide headed our way” and said that schools have “a moral obligation to protect the kids on their digital playground.”

Eight former content moderators at Gaggle shared their experiences for this story. While several believed their efforts in some cases did shield kids from serious harm, they also surfaced significant questions about the company’s efficacy, its employment practices and its effect on students’ civil rights.

Among the moderators who worked on a contractual basis, none had prior experience in school safety, security or mental health. Instead, their employment histories included retail work and customer service, but they were drawn to Gaggle while searching for remote jobs that promised flexible hours.

They described an impersonal and cursory hiring process that appeared automated. Former moderators reported submitting applications online and never having interviews with Gaggle managers — either in-person, on the phone or over Zoom — before landing jobs.

Once hired, moderators reported insufficient safeguards to protect students’ sensitive data, a work culture that prioritized speed over quality, scheduling issues that sent them scrambling to get hours and frequent exposure to explicit content that left some traumatized. Contractors lacked benefits, including mental health care, and one former moderator said he quit after repeated exposure to explicit material left him so disturbed he couldn’t sleep and without “any money to show for what I was putting up with.”

Gaggle’s content moderation team includes as many as 600 contractors at any given time, while just two dozen moderators work as employees who have access to benefits and on-the-job training that lasts several weeks. Gaggle executives have sought to downplay contractors’ role with the company, arguing they use “common sense” to distinguish false flags generated by the algorithm from potential threats and do “not require substantial training.”

While the experiences reported by Gaggle’s moderator team resemble those of content moderators at social media platforms like Meta-owned Facebook, Patterson said his company relies on “U.S.-based, U.S.-cultured reviewers as opposed to outsourcing that work to India or Mexico or the Philippines,” as the social media giant does. He rebuffed former moderators who said they lacked sufficient time to consider the severity of a particular item.

“Some people are not fast decision-makers. They need to take more time to process things and maybe they’re not right for that job,” he told The 74. “For some people, it’s no problem at all. For others, their brains don’t process that quickly.”

Executives also sought to minimize the contractors’ access to students’ personal information; a spokeswoman said they see only “small snippets of text” and lack access to what’s known as students’ “personally identifiable information.” Yet former contractors described reading lengthy chat logs, seeing nude photographs and, in some cases, coming upon students’ names. Several former moderators said they struggled to determine whether something should be escalated as harmful due to “gray areas,” such as whether a Victoria’s Secret lingerie ad would be considered acceptable or not.

“Those people are really just the very, very first pass,” Gaggle spokeswoman Paget Hetherington said. “It doesn’t really need training, it’s just like if there’s any possible doubt with that particular word or phrase it gets passed on.”

Molly McElligott, a former content moderator and customer service representative, said management was laser focused on performance metrics, appearing more interested in business growth and profit than protecting kids.

“I went into the experience extremely excited to help children in need,” McElligott wrote in an email. Unlike the contractors, McElligott was an employee at Gaggle, where she worked for five months in 2021 before taking a position at the Manhattan District Attorney’s Office in New York. “I realized that was not the primary focus of the company.”

Gaggle is part of a burgeoning campus security industry that’s seen significant business growth in the wake of mass school shootings as leaders scramble to prevent future attacks. Patterson, who founded the company in 1999 by offering student email accounts that could be monitored for pornography and cursing, said its focus now is mitigating the pandemic-driven youth mental health crisis.

Patterson said the team talks about “lives saved” and child safety incidents at every meeting, and they are open about sharing the company’s financial outlook so that employees “can have confidence in the security of their jobs.”

‘We are just expendable’

Facing new federal scrutiny along with three other companies that monitor students online, Gaggle executives recently told lawmakers the company relies on a “highly trained content review team” to analyze student materials and flag safety threats. Yet former contractors, who make up the bulk of Gaggle’s content review team, described their training as “a joke,” consisting of a slideshow and an online quiz, that left them ill-equipped to complete a job with such serious consequences for students and schools.

As an employee on the company’s safety team, McElligott said she underwent two weeks of training but the disorganized instruction meant she and other moderators were “more confused than when we started.”

Former content moderators have also flocked to employment websites like Indeed.com to warn job seekers about their experiences with the company, often sharing reviews that resembled the former moderators’ feedback to The 74.

“If you want to be not cared about, not valued and be completely stressed/traumatized on a daily basis this is totally the job for you,” one anonymous reviewer wrote on Indeed. “Warning, you will see awful awful things. No they don’t provide therapy or any kind of support either.

“That isn’t even the worst part,” the reviewer continued. “The worst part is that the company does not care that you hold them on your backs. Without safety reps they wouldn’t be able to function, but we are just expendable.”

As the first layer of Gaggle’s human review team, contractors analyze materials flagged by the algorithm and decide whether to escalate students’ communications for additional consideration. Designated employees on Gaggle’s Safety Team are in charge of calling or emailing school officials to notify them of troubling material identified in students’ files, Patterson said.

Gaggle’s staunchest critics have questioned the tool’s efficacy and describe it as a student privacy nightmare. In March, Democratic Sens. Elizabeth Warren and Ed Markey urged greater federal oversight of Gaggle and similar companies to protect students’ civil rights and privacy. In a report, the senators said the tools could surveil students inappropriately, compound racial disparities in school discipline and waste tax dollars.

The information shared by the former Gaggle moderators with The 74 “struck me as the worst-case scenario,” said attorney Amelia Vance, the co-founder and president of Public Interest Privacy Consulting. Content moderators’ limited training and vetting, as well as their lack of backgrounds in youth mental health, she said, “is not acceptable.”

In its recent letter to lawmakers, Gaggle described a two-tiered review procedure but didn’t disclose that low-wage contractors were the first line of defense. CEO Patterson told The 74 they “didn’t have nearly enough time” to respond to lawmakers’ questions about their business practices and didn’t want to divulge proprietary information. Gaggle uses a third party to conduct criminal background checks on contractors, Patterson said, but he acknowledged they aren’t interviewed before getting placed on the job.

“There’s a lot of contractors. We can’t do a physical interview of everyone and I don’t know if that’s appropriate,” he said. “It might actually introduce another set of biases in terms of who we hire or who we don’t hire.”

‘Other eyes were seeing it’

In a previous investigation, The 74 analyzed a cache of public records to expose how Gaggle’s algorithm and content moderators subject students to relentless digital surveillance long after classes end for the day, extending schools’ authority far beyond their traditional powers to regulate speech and behavior, including at home. Gaggle’s algorithm relies largely on keyword matching and gives content moderators a broad snapshot of students’ online activities including diary entries, classroom assignments and casual conversations between students and their friends.

After the pandemic shuttered schools and shuffled students into remote learning, Gaggle saw a surge in students’ online materials and in the number of school districts interested in its services. Gaggle reported a 20% bump in business as educators scrambled to keep a watchful eye on students whose chatter with peers moved from school hallways to instant messaging platforms like Google Hangouts. One year into the pandemic, Gaggle reported a 35% increase in references to suicide and self-harm, accounting for more than 40% of all flagged incidents.

Waskiewicz, who began working for Gaggle in January 2020, said that remote learning spurred an immediate shift in students’ online behaviors. Under lockdown, students without computers at home began using school devices for personal conversations. Sifting through the everyday exchanges between students and their friends, Waskiewicz said, became a time suck and left her questioning her own principles.

“I felt kind of bad because the kids didn’t have the ability to have stuff of their own and I wondered if they realized that it was public,” she said. “I just wonder if they realized that other eyes were seeing it other than them and their little friends.”

Student activity monitoring software like Gaggle has become ubiquitous in U.S. schools, and 81% of teachers work in schools that use tools to track students’ computer activity, according to a recent survey by the nonprofit Center for Democracy and Technology. A majority of teachers said the benefits of using such tools, which can block obscene material and monitor students’ screens in real time, outweigh potential risks.

Likewise, students generally recognize that their online activities on school-issued devices are being observed, the survey found, and alter their behaviors as a result. More than half of student respondents said they don’t share their true thoughts or ideas online as a result of school surveillance and 80% said they were more careful about what they search online.

A majority of parents reported that the benefits of keeping tabs on their children’s activity exceeded the risks. Yet they may not have a full grasp on how programs like Gaggle work, including the heavy reliance on untrained contractors and weak privacy controls revealed by The 74’s reporting, said Elizabeth Laird, the group’s director of equity in civic technology.

“I don’t know that the way this information is being handled actually would meet parents’ expectations,” Laird said.

Another former contractor, who reached out to The 74 to share his experiences with the company anonymously, became a Gaggle moderator at the height of the pandemic. As COVID-19 cases grew, he said he felt unsafe continuing his previous job as a caregiver for people with disabilities so he applied to Gaggle because it offered remote work.

About a week after he submitted an application, Gaggle gave him a key to kids’ private lives — including, most alarming to him, their nude selfies. Exposure to such content was traumatizing, the former moderator said, and while the job took a toll on his mental well-being, it didn’t come with health insurance.

“I went to a mental hospital in high school due to some hereditary mental health issues and seeing some of these kids going through similar things really broke my heart,” said the former contractor, who shared his experiences on the condition of anonymity, saying he feared possible retaliation by the company. “It broke my heart that they had to go through these revelations about themselves in a context where they can’t even go to school and get out of the house a little bit. They have to do everything from home — and they’re being constantly monitored.”

Gaggle employees are offered benefits, including health insurance, and can attend group therapy sessions twice per month, Hetherington said. Patterson acknowledged the job can take a toll on staff moderators, but sought to downplay its effects on contractors and said they’re warned about exposure to disturbing content during the application process. He said using contractors allows Gaggle to offer the service at a price school districts can afford.

“Quite honestly, we’re dealing with school districts with very limited budgets,” Patterson said. “There have to be some tradeoffs.”

The anonymous contractor said he wasn’t as concerned about his own well-being as he was about the welfare of the students under the company’s watch. The company lacked adequate safeguards to protect students’ sensitive information from leaking outside the digital environment that Gaggle built for moderators to review such materials. Contract moderators work remotely with limited supervision or oversight, and he became especially concerned about how the company handled students’ nude images, which are reported to school districts and the National Center for Missing and Exploited Children. Nudity and sexual content accounted for about 17% of emergency phone calls and email alerts to school officials last school year, according to Gaggle.

Contractors, he said, could easily save the images for themselves or share them on the dark web.

Patterson acknowledged the possibility but said he wasn’t aware of any data breaches.

“We do things in the interface to try to disable the ability to save those things,” Patterson said, but “you know, human beings who want to get around things can.”

‘Made me feel like the day was worth it’

Vara Heyman was looking for a career change. After working jobs in retail and customer service, she made the pivot to content moderation and a contract position with Gaggle was her first foot in the door. She was left feeling baffled by the impersonal hiring process, especially given the high stakes for students.

Waskiewicz had a similar experience. In fact, she said the only time she ever interacted with a Gaggle supervisor was when she was instructed to provide her bank account information for direct deposit. The interaction left her questioning whether the company that contracts with more than 1,500 school districts was legitimate or a scam.

“It was a little weird when they were asking for the banking information, like ‘Wait a minute is this real or what?’” Waskiewicz said. “I Googled them and I think they’re pretty big.”

Heyman said that sense of disconnect continued after being hired, with communications between contractors and their supervisors limited to a Slack channel.

Despite the challenges, several former moderators believe their efforts kept kids safe from harm. McElligott, the former Gaggle safety team employee, recalled an occasion when she found a student’s suicide note.

“Knowing I was able to help with that made me feel like the day was worth it,” she said. “Hearing from the school employees that we were able to alert about self-harm or suicidal tendencies from a student they would never expect to be suffering was also very rewarding. It meant that extra attention should or could be given to the student in a time of need.”

Susan Enfield, the superintendent of Highline Public Schools in suburban Seattle, said her district’s contract with Gaggle has saved lives. Earlier this year, for example, the company detected a student’s suicide note early in the morning, allowing school officials to spring into action. The district uses Gaggle to keep kids safe, she said, but acknowledged it can be a disciplinary tool if students violate the district’s code of conduct.

“No tool is perfect, every organization has room to improve, I’m sure you could find plenty of my former employees here in Highline that would give you an earful about working here as well,” said Enfield, one of 23 current or former superintendents from across the country whom Gaggle cited as references in its letter to Congress.

“There’s always going to be pros and cons to any organization, any service,” Enfield told The 74, “but our experience has been overwhelmingly positive.”

True safety threats were infrequent, former moderators said, and most of the content was mundane, in part because the company’s artificial intelligence lacked sophistication. They said the algorithm routinely flagged students’ papers on the novels To Kill a Mockingbird and The Catcher in the Rye. They also reported being inundated with spam emailed to students, acting as human spam filters for a task that’s long been automated in other contexts.

Conor Scott, who worked as a contract moderator while in college, said that “99% of the time” Gaggle’s algorithm flagged pedestrian materials including pictures of sunsets and students’ essays about World War II. Valid safety concerns, including references to violence and self-harm, were rare, Scott said. But he still believed the service had value and felt he was doing “the right thing.”

McElligott said that managers’ personal opinions added another layer of complexity. Though moderators were “held to strict rules of right and wrong decisions,” she said they were ultimately “being judged against our managers’ opinions of what is concerning and what is not.”

“I was told once that I was being overdramatic when it came to a potential inappropriate relationship between a child and adult,” she said. “There was also an item that made me think of potential trafficking or child sexual abuse, as there were clear sexual plans to meet up — and when I alerted it, I was told it was not as serious as I thought.”

Patterson acknowledged that gray areas exist and that human discretion is a factor in deciding what materials are ultimately elevated to school leaders. But such materials, he said, are not the most urgent safety issues. He said their algorithm errs on the side of caution and flags harmless content because district leaders are “so concerned about students.”

The former moderator who spoke anonymously said he grew alarmed by the sheer volume of mundane student materials that were captured by Gaggle’s surveillance dragnet, and pressure to work quickly didn’t offer enough time to evaluate long chat logs between students having “heartfelt and sensitive” conversations. On the other hand, run-of-the-mill chatter offered him a little wiggle room.

“When I would see stuff like that I was like ‘Oh, thank God, I can just get this out of the way and heighten how many items per hour I’m getting,’” he said. “It’s like ‘I hope I get more of those because then I can maybe spend a little more time actually paying attention to the ones that need it.’”

Ultimately, he said he was unprepared for such extensive access to students’ private lives. Because Gaggle’s algorithm flags keywords like “gay” and “lesbian,” for example, it alerted him to students exploring their sexuality online. Hetherington, the Gaggle spokeswoman, said such keywords are included in its dictionary to “ensure that these vulnerable students are not being harassed or suffering additional hardships,” but critics have accused the company of subjecting LGBTQ students to disproportionate surveillance.

“I thought it would just be stopping school shootings or reducing cyberbullying but no, I read the chat logs of kids coming out to their friends,” the former moderator said. “I felt tremendous power was being put in my hands” to distinguish students’ benign conversations from real danger, “and I was given that power immediately for $10 an hour.”

A privacy issue

For years, student privacy advocates and civil rights groups have warned about the potential harms of Gaggle and similar surveillance companies. Fourteen-year-old Teeth Logsdon-Wallace, a Minneapolis high school student, fell under Gaggle’s watchful eye during the pandemic. Last September, he used a class assignment to write about a previous suicide attempt and explained how music helped him cope after being hospitalized. Gaggle flagged the assignment to a school counselor, a move the teen called a privacy violation.

He said it’s “just really freaky” that moderators can review students’ sensitive materials in public places like at basketball games, but ultimately felt bad for the contractors on Gaggle’s content review team.

“Not only is it violating the privacy rights of students, which is bad for our mental health, it’s traumatizing these moderators, which is bad for their mental health,” he said. Relying on low-wage workers with high turnover, limited training and without backgrounds in mental health, he said, can have consequences for students.

“Bad labor conditions don’t just affect the workers,” he said. “It affects the people they say they are helping.”

Gaggle cannot prohibit contractors from reviewing students’ private communications in public settings, Heather Durkac, the senior vice president of operations, said in a statement.

“However, the contractors know the nature of the content they will be reviewing,” Durkac said. “It is their responsibility and part of their presumed good and reasonable work ethic to not be conducting these content reviews in a public place.”

Gaggle’s former contractors also weighed students’ privacy rights. Heyman said she “went back and forth” on those implications for several days before applying for the job. She ultimately decided that Gaggle was acceptable since it is limited to school-issued technology.

“If you don’t want your stuff looked at, you can use Hotmail, you can use Gmail, you can use Yahoo, you can use whatever else is out there,” she said. “As long as they’re being told and their parents are being told that their stuff is going to be monitored, I feel like that is OK.”

Logsdon-Wallace and his mother said they didn’t know Gaggle existed until his classroom assignment got flagged to a school counselor.

Meanwhile, the anonymous contractor said that chat conversations between students that got picked up by Gaggle’s algorithm helped him understand the effects that surveillance can have on young people.

“Sometimes a kid would use a curse word and another kid would be like, ‘Dude, shut up, you know they’re watching these things,’” he said. “These kids know that they’re being looked in on,” even if they don’t realize their observer is a contractor working from the couch in his living room. “And to be the one that is doing that — that is basically fulfilling what these kids are paranoid about — it just felt awful.”

Disclosure: Campbell Brown is the head of news partnerships at Facebook. Brown co-founded The 74 and sits on its board of directors.
