Survey: AI is Here, but Only California and Oregon Guide Schools on its Use

'We’re not seeing a lot of movement,' one researcher said of the reaction of most state education departments.

Get stories like these delivered straight to your inbox. Sign up for The 74 Newsletter

Artificial intelligence now has a daily presence in many teachers’ and students’ lives, with chatbots like ChatGPT, Khan Academy’s Khanmigo tutor and AI image generators like Ideogram.ai all freely available.

But nearly a year after most of us came face-to-face with the first of these tools, a new survey suggests that few states are offering educators substantial guidance on how to best use AI, let alone fairly and with appropriate privacy protections.

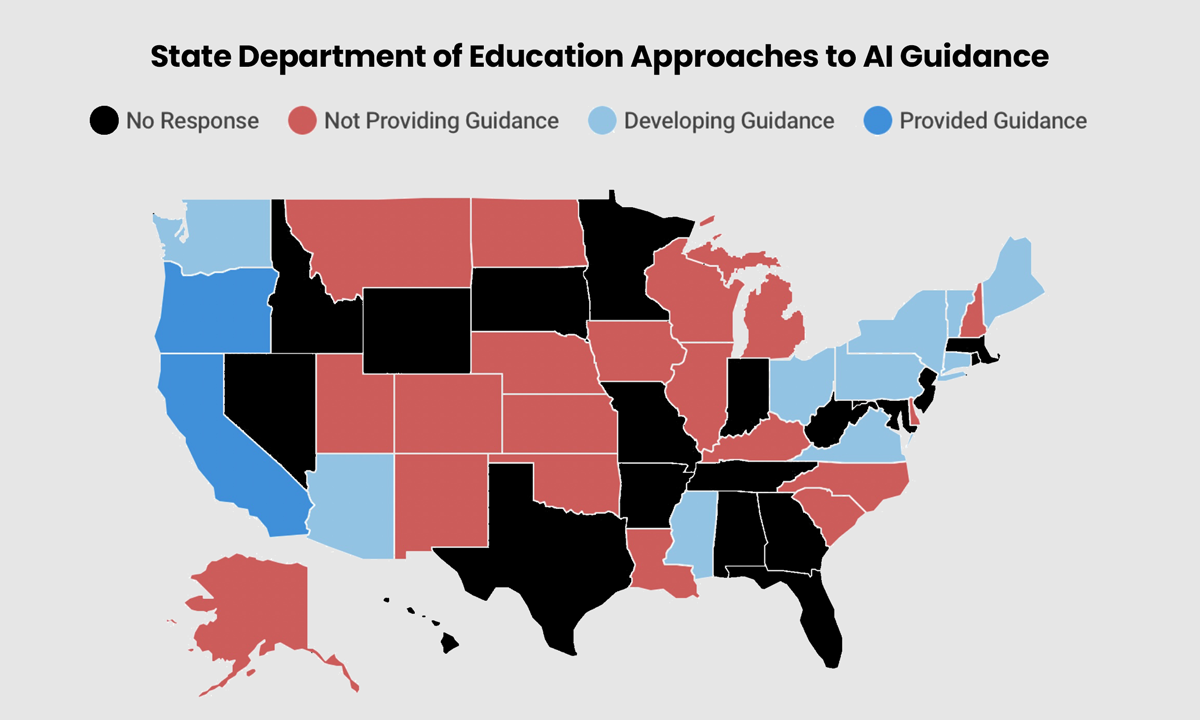

As of mid-October, just two states, California and Oregon, offered official guidance to schools on using AI, according to the Center for Reinventing Public Education at Arizona State University.

CRPE said 11 more states are developing guidance, but that another 21 states don’t plan to give schools guidelines on AI “in the foreseeable future.”

Seventeen states didn’t respond to CRPE’s survey and haven’t made official guidance publicly available.

As more schools experiment with AI, good policies and advice — or a lack thereof — will “drive the ways adults make decisions in school,” said Bree Dusseault, CRPE’s managing director. That will ripple out, dictating whether these new tools will be used properly and equitably.

“We’re not seeing a lot of movement in states getting ahead of this,” she said.

The reality in schools is that AI is here. Edtech companies are pitching products and schools are buying them, even if state officials are still trying to figure it all out.

“It doesn’t surprise me,” said Satya Nitta, CEO of Merlyn Mind, a generative AI company developing voice-activated assistants for teachers. “Normally the technology is well ahead of regulators and lawmakers. So they’re probably scrambling to figure out what their standard should be.”

Nitta said a lot of educators and officials this week are likely looking “very carefully” at Monday’s White House executive order on AI “to figure out what next steps are.”

The order requires, among other things, that AI developers share safety test results with the U.S. government and develop standards that ensure AI systems are “safe, secure, and trustworthy.”

It comes five months after the U.S. Department of Education released a detailed, 71-page guide with recommendations on using AI in education.

Deferring to districts

The fact that 13 states are at least in the process of helping schools figure out AI is significant. Last summer, no states offered such help, CRPE found. Officials in New York, Iowa, Rhode Island and Wyoming said decisions about many issues related to AI, such as academic integrity and blocking websites or tools, are made on the local level.

Even so, researchers said, it’s notable that most states don’t plan AI-specific strategies or guidance in the 2023-24 school year.

There are a few promising developments: North Carolina legislation will soon require high school graduates to pass a computer science course. In Virginia, Gov. Glenn Youngkin in September issued an executive order on AI careers. And Pennsylvania Gov. Josh Shapiro signed an executive order in September to create a state governing board to guide use of generative AI, including developing training programs for state employees.

But educators need help understanding artificial intelligence, “while also trying to navigate its impact,” said Tara Nattrass, managing director of innovation strategy at the International Society for Technology in Education. “States can ensure educators have accurate and relevant guidance related to the opportunities and risks of AI so that they are able to spend less time filtering information and more time focused on their primary mission: teaching and learning.”

Beth Blumenstein, Oregon’s interim director of digital learning & well-rounded access, said AI is already being used in Oregon schools. And the state Department of Education has received requests from educators asking for support, guidance and professional development.

Generative AI is “a powerful tool that can support education practices and provide services to students that can greatly benefit their learning,” she said. “However, it is a highly complex tool that requires new learning, safety considerations, and human oversight.”

Three big issues she hears about are cheating, plagiarism and data privacy, including how not to run afoul of Oregon’s Student Information Protection Act or the federal Children’s Online Privacy Protection Act.

‘Now I have to do AI?’

In August, CRPE conducted focus groups with 18 superintendents, principals and senior administrators in five states who said they were cautiously optimistic about AI’s potential, but many complained about navigating yet another new disruption.

“We just got through this COVID hybrid remote learning,” one leader told researchers. “Now I have to do AI?”

Nitta, Merlyn Mind’s CEO, said that syncs with his experience.

“Broadly, school districts are looking for some help, some guidance: ‘Should we use ChatGPT? Should we not use it? Should we use AI? Is it private? Are they in violation of regulations?’ It’s a complex topic. It’s full of all kinds of mines and landmines.”

And the stakes are high, he said. No educator wants to appear in a newspaper story about her school using an AI chatbot that feeds inappropriate information to students.

“I wouldn’t go so far as to say there’s a deer-caught-in-headlights moment here,” Nitta said, “but there’s certainly a lot of concern. And I do believe it’s the responsibility of authorities, of responsible regulators, to step in and say, ‘Here’s how to use AI safely and appropriately.’ ”
